Posts by jonas

Quasar at the GPU Technology conference 2016

GPU Technology conference 2016

We’re just back from an interesting GPU Technology Conference (GTC 2016), organized by NVIDIA. If you missed us at the booth we shared with Cloudalize, or at the Flanders Investment and Trade booth, then definitely have a look at our posters.

Posters:

- Our first poster (p6172) focuses on the workflow of the Quasar language and run-time, showing its added value for rapid development and fast execution.

- Our second poster (p6177) shows that Quasar enables high-level research in domains such as medical imaging. Here the compact and readable code enables rapid prototyping, while GPU-accelerated execution opens new directions for computationally intensive research.

Quasar showcase at the ICASSP conference

Come and see us at the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2016) in Shanghai, China. We will present several demos on the use of Quasar for rapid prototyping. You can find us at the Emerging Applications and Implementation Technologies session (Tuesday, March 22, 13:30 – 15:30).

Quasar facilitates research on video analysis.

Optical Flow

Simon Donné and his colleagues achieved significant speed-ups for Optical Flow, a widely used video analysis method, thanks to the use of GPUs and Quasar. Tracking an object through time is often still a hard task for a computer. One option is to use optical flow, which finds correspondences between two image frames: which pixel moves where? In general, these techniques compare local neighbourhoods and exploit global information to estimate these correspondences. The authors presented their new approach at the conference for Advanced Concepts for Intelligent Vision Systems (ACIVS) in Catania, Italy.
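To make the idea of comparing local neighbourhoods concrete, here is a minimal block-matching sketch in Python. It is not the variational, SLIC-superpixel-based method from the paper, and it ignores the global information mentioned above: it simply searches a small window in the second frame for the displacement whose neighbourhood has the lowest sum of absolute differences (SAD). The function name `block_matching_flow` and its `radius`/`search` parameters are hypothetical choices for illustration.

```python
from typing import List, Tuple

Image = List[List[float]]  # image[y][x], grey values


def block_matching_flow(frame0: Image, frame1: Image, x: int, y: int,
                        radius: int = 1, search: int = 2) -> Tuple[int, int]:
    """Estimate where pixel (x, y) of frame0 moves to in frame1.

    Compares the (2*radius+1)^2 neighbourhood around (x, y) in frame0 with
    shifted neighbourhoods in frame1 and returns the displacement (dx, dy)
    with the lowest sum of absolute differences. Purely local: no
    smoothness or other global constraint is applied.
    """
    h, w = len(frame0), len(frame0[0])
    best = (0, 0)
    best_cost = float("inf")
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            cost = 0.0
            for oy in range(-radius, radius + 1):
                for ox in range(-radius, radius + 1):
                    # Clamp coordinates so windows near the border stay valid.
                    y0 = min(max(y + oy, 0), h - 1)
                    x0 = min(max(x + ox, 0), w - 1)
                    y1 = min(max(y + oy + dy, 0), h - 1)
                    x1 = min(max(x + ox + dx, 0), w - 1)
                    cost += abs(frame0[y0][x0] - frame1[y1][x1])
            # Ties are resolved in favour of the first candidate scanned.
            if cost < best_cost:
                best_cost = cost
                best = (dx, dy)
    return best
```

Running this for every pixel gives a dense but noisy flow field; methods such as the one in the paper add global regularization to make the correspondences robust.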

“Thanks to the Quasar platform we have both a CPU and GPU implementation of the approach. Therefore we achieve a speed-up factor of more than 40 compared to the existing pixel-based method” – ir. Simon Donné


Reference

“Fast and Robust Variational Optical Flow for High-Resolution Images using SLIC Superpixels”, Simon Donné, Jan Aelterman, Bart Goossens, Wilfried Philips, in Lecture Notes in Computer Science, Advanced Concepts for Intelligent Vision Systems (ACIVS), 2015.

3D Real-time video processing demo

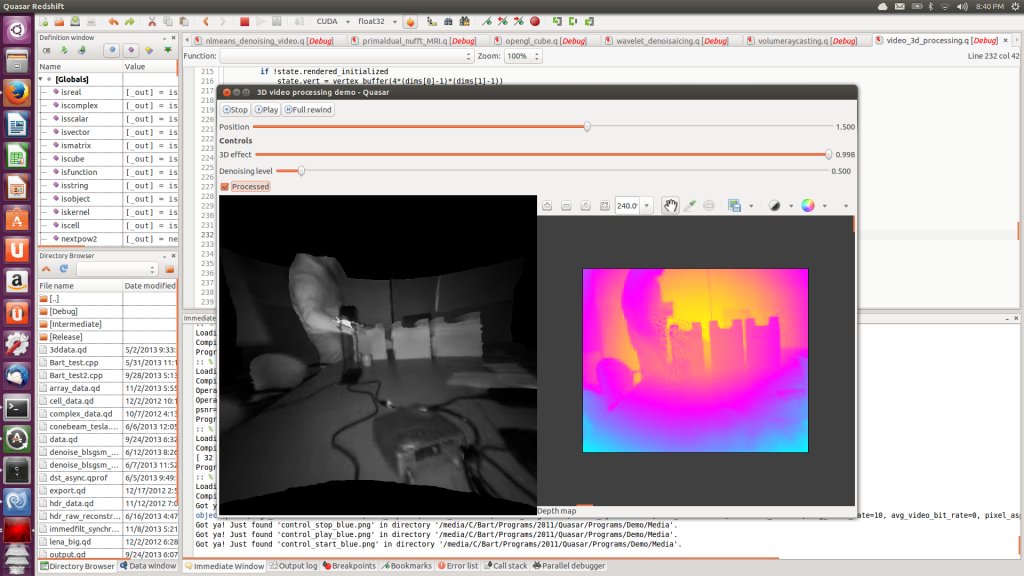

In this post, we demonstrate some of the real-time video processing and visualization capabilities of Quasar. First, a monochrome image and the corresponding depth image were captured with a video camera. Because the raw depth image is quite noisy, some additional processing is required. To visualize the results in 3D, we define a fine rectangular mesh in which the z-coordinate of each vertex is set to the depth value at position (x,y). All processing is automatically performed on the GPU, and the result is visualized through the integrated OpenGL support in Quasar.
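The mesh construction can be sketched in a few lines. This is plain Python rather than Quasar, and the function `depth_to_mesh` and its `scale` parameter are hypothetical: it creates one vertex (x, y, scale * depth[y][x]) per pixel and two triangles per grid cell, producing the kind of flat vertex and index lists one would upload to OpenGL vertex and index buffers. Setting `scale` to 0 yields a flat image, which is roughly what a "3D" extrusion slider would control (an assumption about how the demo works).

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]


def depth_to_mesh(depth: List[List[float]],
                  scale: float = 1.0) -> Tuple[List[Vertex], List[int]]:
    """Turn a depth image into a regular triangle mesh.

    Returns (vertices, indices): one vertex per pixel with its z-coordinate
    set to scale * depth[y][x], and an index list with two triangles per
    grid cell, all using the same winding order.
    """
    h, w = len(depth), len(depth[0])
    vertices: List[Vertex] = []
    for y in range(h):
        for x in range(w):
            vertices.append((float(x), float(y), scale * depth[y][x]))

    indices: List[int] = []
    for y in range(h - 1):
        for x in range(w - 1):
            i = y * w + x  # index of the top-left vertex of this cell
            # Triangles (top-left, top-right, bottom-left) and
            # (top-right, bottom-right, bottom-left).
            indices += [i, i + 1, i + w, i + 1, i + w + 1, i + w]
    return vertices, indices
```

An h x w depth image yields h*w vertices and 6*(h-1)*(w-1) indices, so a "fine" mesh at camera resolution is large, which is one reason to build and render it on the GPU.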

Ubuntu screenshot of the depth image denoising application

The demonstration video first walks through the different parts of this video processing application: the non-local means denoising algorithm used for processing the depth images, the rendering functionality (through OpenGL vertex buffers) and, finally, the user interface code (with sliders, labels and displays).
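For readers unfamiliar with non-local means, the following is a textbook pixelwise version in plain Python (CPU only, no GPU acceleration); it is not the Quasar code from the demo. Each output pixel is a weighted average of the pixels in a search window, with weights that decay with the mean squared difference between the patches around the two pixels. The filtering parameter `h` plays the role of a denoising level; treating it as the demo's slider value is an assumption, as are the `patch` and `search` radii.

```python
import math
from typing import List

Image = List[List[float]]  # image[y][x]


def nl_means(img: Image, h: float, patch: int = 1, search: int = 3) -> Image:
    """Basic pixelwise non-local means filter.

    For every pixel, average all pixels within +/- search in both directions,
    weighting each candidate by exp(-mean_sq_patch_diff / h^2), where the
    patches are (2*patch+1)^2 windows. Larger h means stronger smoothing.
    Requires h > 0.
    """
    rows, cols = len(img), len(img[0])

    def px(y: int, x: int) -> float:
        # Clamp coordinates so patches near the border stay valid.
        return img[min(max(y, 0), rows - 1)][min(max(x, 0), cols - 1)]

    n = (2 * patch + 1) ** 2  # number of pixels per patch
    out = [[0.0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            total_w = 0.0
            acc = 0.0
            for sy in range(y - search, y + search + 1):
                for sx in range(x - search, x + search + 1):
                    if not (0 <= sy < rows and 0 <= sx < cols):
                        continue
                    # Squared distance between the two patches.
                    d2 = 0.0
                    for oy in range(-patch, patch + 1):
                        for ox in range(-patch, patch + 1):
                            diff = px(y + oy, x + ox) - px(sy + oy, sx + ox)
                            d2 += diff * diff
                    w = math.exp(-(d2 / n) / (h * h))
                    total_w += w
                    acc += w * img[sy][sx]
            # The pixel itself always has weight 1, so total_w >= 1.
            out[y][x] = acc / total_w
    return out
```

A larger `h` lets dissimilar patches contribute, which removes more noise but also blurs genuine depth edges; a very small `h` leaves the image essentially unchanged.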

When the program runs, first a flat 2D image is shown on the left, together with the depth image on the right. By adjusting the “3D” slider, the flat image is extruded to a 3D surface.

There are some visible problems in the resulting 3D mesh, especially in the vicinity of the right hand of the test subject. These problems are caused by the reflective properties of the desk lamp standing between the subject and the camera. Increasing the denoising level of the algorithm easily solves this problem. However, although the 3D surface then has a much smoother appearance, the depth image on the right reveals that it is actually over-smoothed. The right balance between smoothing and local surface artifacts can be found by setting the denoising level somewhere “in the middle”.