3D Real-time video processing demo

In this post, we demonstrate some of the real-time video processing and visualization capabilities of Quasar. Initially, a monochromatic image and a corresponding depth image were captured using a video camera. Because the raw depth image is quite noisy, it needs additional processing before it can be used. To visualize the results in 3D, we define a fine rectangular mesh in which the z-coordinate of each vertex is set to the depth value at position (x,y). All processing is automatically performed on the GPU, and the result is visualized through the integrated OpenGL support in Quasar.
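The mesh construction described above can be sketched as follows. This is a minimal CPU-side illustration in plain Python, not the Quasar code from the demo: the function name `depth_to_mesh`, the row-major vertex order and the split of each grid cell into two triangles are assumptions made for the example.

```python
def depth_to_mesh(depth):
    """Build a regular grid mesh from a depth image.

    depth: list of rows (height x width) of depth values.
    Returns (vertices, triangles): vertices is a list of (x, y, z) tuples in
    row-major order, triangles is a list of vertex-index triples.
    """
    height, width = len(depth), len(depth[0])

    # One vertex per pixel; z is the depth value at position (x, y).
    vertices = [(float(x), float(y), float(depth[y][x]))
                for y in range(height) for x in range(width)]

    # Two triangles per grid cell (assumed layout for this sketch).
    triangles = []
    for y in range(height - 1):
        for x in range(width - 1):
            i = y * width + x  # index of the top-left vertex of this cell
            triangles.append((i, i + 1, i + width))
            triangles.append((i + 1, i + width + 1, i + width))
    return vertices, triangles


# Tiny 2 x 3 depth image used as example input.
depth = [[0.0, 1.0, 2.0],
         [3.0, 4.0, 5.0]]
vertices, triangles = depth_to_mesh(depth)
```

In a GPU renderer, flat arrays like these would typically be uploaded once as vertex and index buffers, with only the z-values updated per frame.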

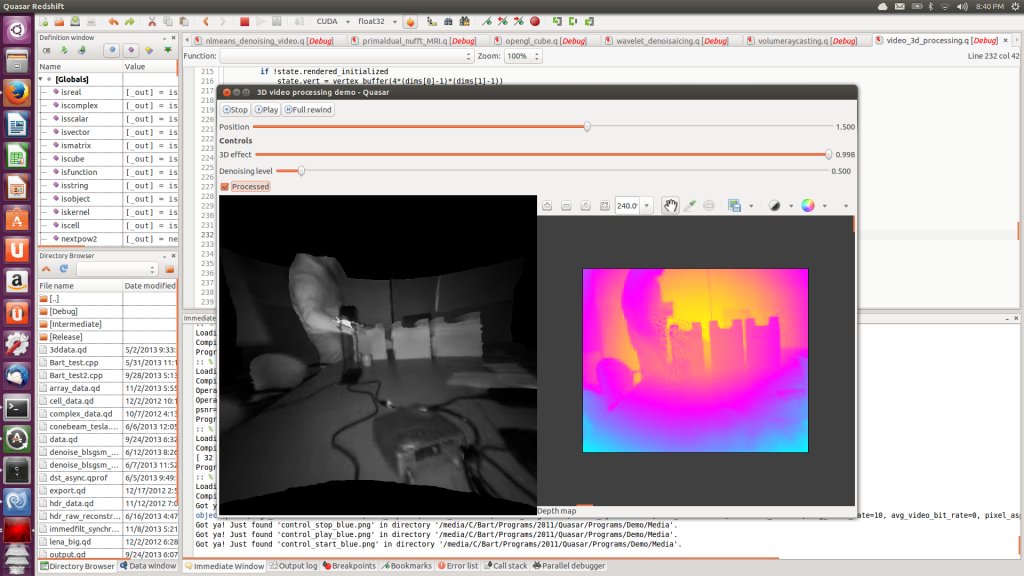

Ubuntu screenshot of the depth image denoising application

The demonstration video first walks through the different parts of the video processing algorithm: the non-local means denoising algorithm used for processing the depth images, the rendering functionality (through OpenGL vertex buffers), and finally the user interface code (with sliders, labels and displays).
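To give an idea of what non-local means denoising does, here is a deliberately simple, unoptimized sketch in plain Python. It is not the GPU implementation shown in the video. The parameter names (`patch_radius`, `search_radius`, `h`) and the Gaussian-style weighting are assumptions of this example, with `h` playing the role of a denoising level.

```python
import math


def nlm_denoise(img, patch_radius=1, search_radius=2, h=0.1):
    """Basic non-local means filter for a 2D image given as a list of rows.

    Each output pixel is a weighted average of the pixels in its search
    window; the weight decreases as the patches around the two pixels differ.
    """
    height, width = len(img), len(img[0])

    def pixel(y, x):
        # Clamp coordinates so patches near the border stay inside the image.
        return img[min(max(y, 0), height - 1)][min(max(x, 0), width - 1)]

    offsets = [(dy, dx)
               for dy in range(-patch_radius, patch_radius + 1)
               for dx in range(-patch_radius, patch_radius + 1)]

    def patch_distance(y0, x0, y1, x1):
        # Mean squared difference between the two patches.
        total = 0.0
        for dy, dx in offsets:
            d = pixel(y0 + dy, x0 + dx) - pixel(y1 + dy, x1 + dx)
            total += d * d
        return total / len(offsets)

    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            weight_sum = 0.0
            value_sum = 0.0
            for qy in range(max(y - search_radius, 0),
                            min(y + search_radius, height - 1) + 1):
                for qx in range(max(x - search_radius, 0),
                                min(x + search_radius, width - 1) + 1):
                    w = math.exp(-patch_distance(y, x, qy, qx) / (h * h))
                    weight_sum += w
                    value_sum += w * img[qy][qx]
            # weight_sum >= 1 because the pixel always matches itself.
            out[y][x] = value_sum / weight_sum
    return out


# Flat depth image with a single outlier in the center.
noisy = [[0.0] * 5 for _ in range(5)]
noisy[2][2] = 1.0
denoised = nlm_denoise(noisy, h=1.0)
```

A larger `h` makes dissimilar patches contribute more, which smooths more strongly; a very small `h` leaves the image almost unchanged.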

When the program runs, first a flat 2D image is shown on the left, together with the depth image on the right. By adjusting the “3D” slider, the flat image is extruded to a 3D surface.
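One simple way to obtain this extrusion effect is to scale the depth values by the slider position before they are used as z-coordinates. The sketch below assumes a slider range of 0 to 1 and a linear mapping; both are assumptions of this example rather than details taken from the demo, and `extrude` is a hypothetical helper name.

```python
def extrude(depth, amount):
    """Scale a depth image by the slider position `amount`.

    amount = 0 gives a flat surface, amount = 1 gives the full depth.
    Values outside [0, 1] are clamped.
    """
    amount = min(max(amount, 0.0), 1.0)
    return [[amount * z for z in row] for row in depth]


depth_row = [[2.0, 4.0]]
flat_surface = extrude(depth_row, 0.0)   # slider fully left
half_surface = extrude(depth_row, 0.5)   # slider in the middle
```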

It can be seen that there are some problems in the resulting 3D mesh, especially in the vicinity of the right hand of the test subject. These problems are caused by the reflective properties of the desk lamp standing between the subject and the camera. Increasing the denoising level of the algorithm removes these artifacts, but at a cost: although the 3D surface then has a much smoother appearance, the depth image on the right reveals that it is actually over-smoothed. The right balance between smoothing and local surface artifacts can be found by setting the denoising level somewhere “in the middle”.